Services & Applications for Industry and Public Institutions

On October 21, 2025, the High-Performance Computing Center Stuttgart (HLRS) hosted the second Forum for Supercomputing & Future Technologies. Under the motto “Services & Applications for Industry and Public Institutions,” experts from research, industry, and the public sector came together to explore how high-performance computing (HPC) is driving digital innovation and transformation across domains.

After a warm welcome by Dr. Andreas Wierse (SIDE / SICOS BW GmbH), the day began with industrial use cases highlighting the digital transformation of SMEs. Erwin Schnell (AeroFEM GmbH) opened with “Der Weg ist das Ziel” (“The Journey Is the Destination”), illustrating how small and medium-sized enterprises can leverage simulation and HPC to navigate the path toward digital maturity. Dr. Andreas Arnegger (OSORA Medical GmbH) followed with an impressive insight into HPC-assisted therapy planning for bone fracture treatment, showing how computational power directly benefits patient care.

In another striking example, Dr. Sebastian Mayer and Dr. Andrey Lutich (PropertyExpert GmbH) demonstrated how AI-based image recognition is revolutionizing automated invoice verification – a clear intersection between data science and high-performance computing.

After a short coffee break, Paul von Berg (Urban Monkeys GmbH / DataMonkey) shared his experience fine-tuning a geospatial LLM on HPC systems, sparking lively discussions among attendees. Daniel Gröger (alitiq GmbH) presented an FFplus-supported project using machine learning for short-term PV power forecasting, followed by Dr. Xin Liu (SIDE / Jülich Supercomputing Centre), who showcased dam-break simulations and German Bight operation models – tangible examples of HPC applications in the public sector.

Before lunch, several key initiatives were introduced, including SIDE, FFplus, JAIF, HammerHAI, EDIH Südwest and EDIH-AICS. Together, they illustrated how research, funding, and industry are closely collaborating to enhance digital innovation and technological sovereignty in Germany and Europe.

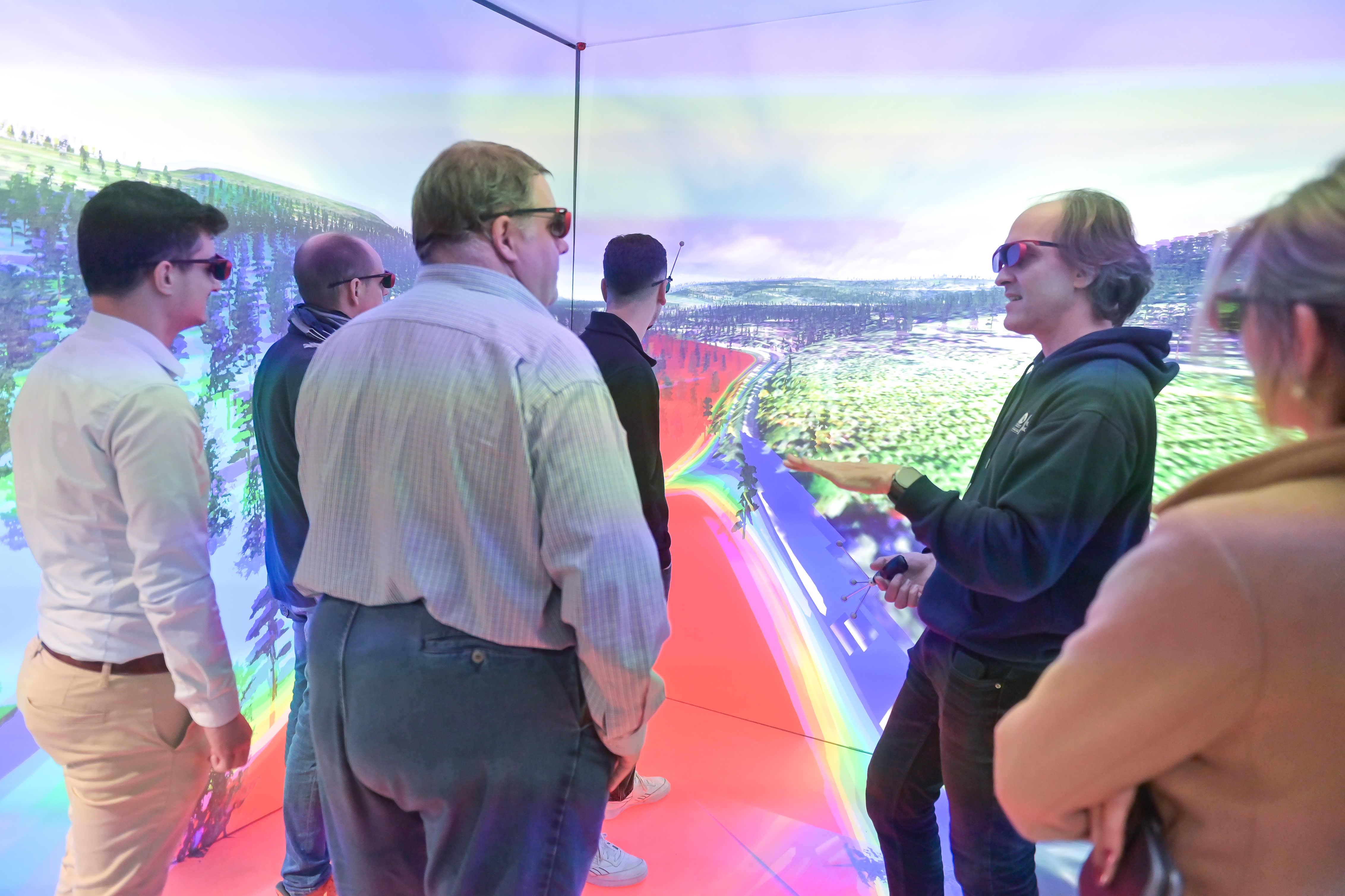

The afternoon program combined practical experience with networking. Participants could either join speed dating with HPC, AI, and funding experts or take a data center tour to see HLRS infrastructure in action. Later sessions included one-on-one expert consultations, a hands-on workshop, “How to Use a Supercomputer: The Basics,” by Dr. Maksym Deliyergiyev, and a visualization workshop led by the HLRS Visualization Department, where participants experienced immersive data environments.

In closing, Dr. Andreas Wierse offered a look ahead to upcoming SIDE and EuroCC activities, emphasizing the growing role of collaboration and accessibility in supercomputing. The forum once again proved that HPC is no longer an exclusive domain of research institutions but a practical tool for innovation in both industry and the public sector.

The morning program of the second SIDE Forum can now be viewed below.