Services & Applications for Industry and Public Institutions

On October 21, 2025, the High-Performance Computing Center Stuttgart (HLRS) hosted the second Forum for Supercomputing & Future Technologies. Under the motto “Services & Applications for Industry and Public Institutions,” experts from research, industry, and the public sector came together to explore how high-performance computing (HPC) is driving digital innovation and transformation across domains.

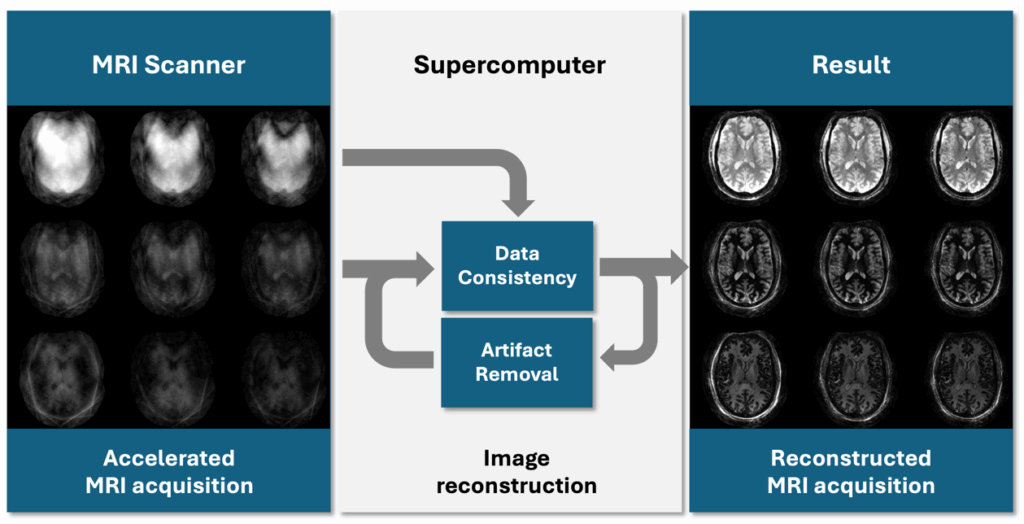

After a warm welcome by Dr. Andreas Wierse (SIDE / SICOS BW GmbH), the day began with industrial use cases highlighting the digital transformation of SMEs. Erwin Schnell (AeroFEM GmbH) opened with “Der Weg ist das Ziel” (“The journey is the destination”), illustrating how small and medium-sized enterprises can leverage simulation and HPC to navigate the path toward digital maturity. Dr. Andreas Arnegger (OSORA Medical GmbH) followed with an impressive insight into HPC-assisted therapy planning for bone fracture treatment, showing how computational power directly benefits patient care.

In another striking example, Dr. Sebastian Mayer and Dr. Andrey Lutich (PropertyExpert GmbH) demonstrated how AI-based image recognition is revolutionizing automated invoice verification – a clear intersection between data science and high-performance computing.

After a short coffee break, Paul von Berg (Urban Monkeys GmbH / DataMonkey) shared his experience fine-tuning a geospatial LLM on HPC systems, sparking lively discussions among attendees. Daniel Gröger (alitiq GmbH) presented an FFplus-supported project using machine learning for short-term PV power forecasting, followed by Dr. Xin Liu (SIDE / Jülich Supercomputing Centre), who showcased dam-break simulations and German Bight operation models – tangible examples of HPC applications in the public sector.

Before lunch, several key initiatives were introduced, including SIDE, FFplus, JAIF, HammerHAI, EDIH Südwest and EDIH-AICS. Together, they illustrated how research, funding, and industry are closely collaborating to enhance digital innovation and technological sovereignty in Germany and Europe.

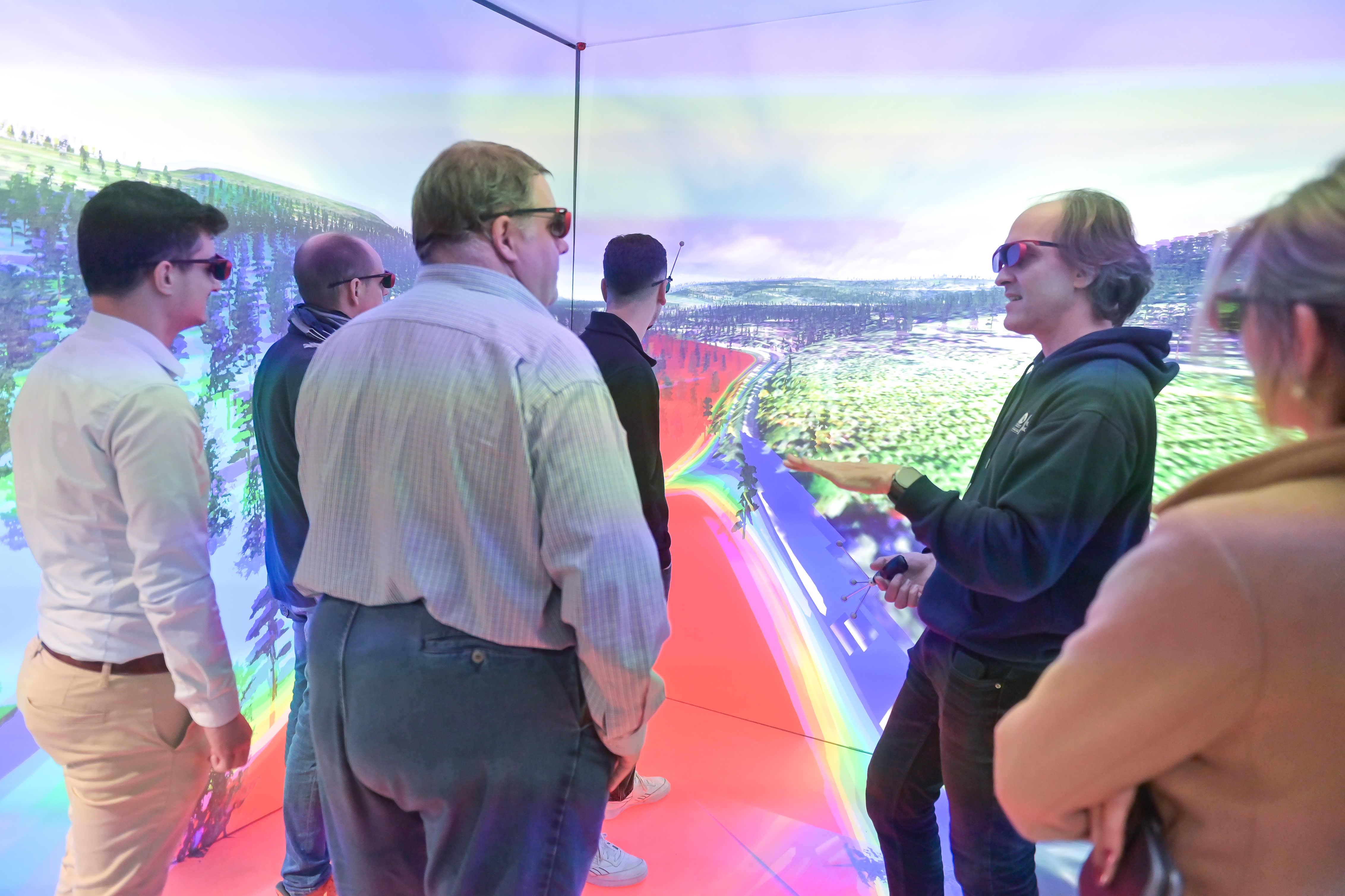

The afternoon program combined practical experience with networking. Participants could either join a speed-dating session with HPC, AI, and funding experts or take a data center tour to see HLRS infrastructure in action. Later sessions included one-on-one expert consultations, a hands-on workshop, “How to Use a Supercomputer: The Basics,” by Dr. Maksym Deliyergiyev, and a visualization workshop led by the HLRS Visualization Department, where participants experienced immersive data environments.

In closing, Dr. Andreas Wierse offered a look ahead to upcoming SIDE and EuroCC activities, emphasizing the growing role of collaboration and accessibility in supercomputing. The forum once again proved that HPC is no longer an exclusive domain of research institutions but a practical tool for innovation in both industry and the public sector.

The morning program of the second SIDE Forum can now be viewed below.
